If you’re a Flash buff, it’s hard to ignore the direction Adobe is taking with its newest Flash Player, which introduces the Stage3D capabilities (previously codenamed “Molehill”). Molehill is a set of low-level, GPU-accelerated 3D APIs designed to work across multiple screens and devices. For the Flash 3D aficionados out there, what does this mean? It means our world is about to change… cube textures, z-buffering, vertex and fragment shaders… And for the layman, here are some numbers that just make you say wow:

- Previous Flash Players supported roughly 4,000 to 8,000 polygons

- Molehill has been stress-tested with millions of polygons

But diving into the guts of the API isn’t for the faint-hearted. Coming from the development world, we’re all familiar with ‘Hello World’ examples… so how about a ‘Hello Triangle’ example? The following code snippet was authored by Ryan Speets. It is an incomplete excerpt: it sets up the buffers and shaders for a simple triangle and square, but the enterFrame handler that actually draws them to the screen is not shown.

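```actionscript
// Imports assumed for the class that contains this handler. AGALMiniAssembler
// is distributed by Adobe in the com.adobe.utils package; it is not part of
// the Flash Player API itself.
import com.adobe.utils.AGALMiniAssembler;
import flash.display.Stage3D;
import flash.display3D.*;
import flash.events.Event;

// Presumably registered as the Event.CONTEXT3D_CREATE listener on a Stage3D.
// context, program, the four buffers and the enterFrame handler are class
// members that are not shown in this excerpt.
public function myContext3DHandler ( event : Event ) : void {
    var stage3D:Stage3D;
    var vertexShaderAssembler:AGALMiniAssembler;
    var fragmentShaderAssembler:AGALMiniAssembler;

    stage3D = event.target as Stage3D;
    context = stage3D.context3D;

    // 640x480 back buffer, anti-aliasing level 4, depth and stencil enabled
    context.configureBackBuffer(640, 480, 4, true);

    // Set up triangle's buffers: 3 vertices of 6 Numbers each (x, y, z, r, g, b)
    trianglevertexbuffer = context.createVertexBuffer(3, 6);
    trianglevertexbuffer.uploadFromVector ( Vector.<Number>([
         0, 1, 0,  1, 0, 0,   // top, red
        -1,-1, 0,  0, 1, 0,   // bottom left, green
         1,-1, 0,  0, 0, 1    // bottom right, blue
    ]), 0, 3 );
    triangleindexbuffer = context.createIndexBuffer(3);
    triangleindexbuffer.uploadFromVector(Vector.<uint>([0, 1, 2]), 0, 3);

    // Set up square's buffers: 4 vertices sharing one colour,
    // drawn as two triangles (6 indices)
    squarevertexbuffer = context.createVertexBuffer(4, 6);
    squarevertexbuffer.uploadFromVector ( Vector.<Number>([
        -1, 1, 0,  0.5, 0.5, 1.0,
         1, 1, 0,  0.5, 0.5, 1.0,
         1,-1, 0,  0.5, 0.5, 1.0,
        -1,-1, 0,  0.5, 0.5, 1.0
    ]), 0, 4 );
    squareindexbuffer = context.createIndexBuffer(6);
    squareindexbuffer.uploadFromVector(Vector.<uint>([0, 1, 2, 0, 2, 3]), 0, 6);

    // Assemble shaders. The vertex shader transforms the position (va0) by the
    // 4x4 matrix in vc0-vc3, then by the one in vc4-vc7, and passes the vertex
    // colour (va1) on to the fragment shader through v0.
    vertexShaderAssembler = new AGALMiniAssembler();
    vertexShaderAssembler.assemble( Context3DProgramType.VERTEX,
        "m44 vt0, va0, vc0 \n" +
        "m44 op, vt0, vc4 \n" +
        "mov v0, va1"
    );

    // The fragment shader simply outputs the interpolated colour.
    fragmentShaderAssembler = new AGALMiniAssembler();
    fragmentShaderAssembler.assemble( Context3DProgramType.FRAGMENT,
        "mov oc, v0\n"
    );

    // Upload and set active the shaders
    program = context.createProgram();
    program.upload(vertexShaderAssembler.agalcode, fragmentShaderAssembler.agalcode);
    context.setProgram(program);

    this.addEventListener(Event.ENTER_FRAME, enterFrame);
}
```

Whatever happened to var triangle:Triangle = new Triangle()? As mentioned earlier, Molehill is a low-level API (and they mean really low). Fortunately, a number of robust frameworks that are compatible with Molehill are freely available. They abstract much of the complexity of Stage3D away from us so we can focus on doing “funner” stuff. What are some of these frameworks?

- Proscenium (written by Adobe)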

- Flare3D (http://www.flare3d.com/)

- Alternativa3D (http://alternativaplatform.com/en/alternativa3d/)

- Sophie3D (http://www.sophie3d.com/website/index_en.php)

- Yogurt3D (http://www.yogurt3d.com/)

- Frima (http://frimastudio.com/)

- Away3D (http://away3d.com/)

- Coppercube (http://www.ambiera.com/coppercube/)

- The list goes on and on…
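To see what “really low” means in practice, here is a CPU-side sketch, in TypeScript (syntactically close to ActionScript 3), of what the Hello Triangle vertex shader computes for each vertex. It rests on assumptions the excerpt doesn’t state: positions are bound to va0 as FLOAT_3 at offset 0 and colours to va1 as FLOAT_3 at offset 3 (for example via context.setVertexBufferAt(0, trianglevertexbuffer, 0, Context3DVertexBufferFormat.FLOAT_3) in the missing draw code), the missing w component is 1, and each constant matrix occupies four consecutive registers treated as rows. The helper names are hypothetical.

```typescript
// CPU-side sketch of the Hello Triangle vertex shader (hypothetical helpers).
// Assumptions: position bound as FLOAT_3 at offset 0, colour as FLOAT_3 at
// offset 3, w = 1, and each matrix stored as four row registers.
type Vec4 = [number, number, number, number];
type Mat4 = [Vec4, Vec4, Vec4, Vec4]; // four constant registers, one row each

const FLOATS_PER_VERTEX = 6; // x, y, z, r, g, b, matching createVertexBuffer(n, 6)

// Read va0 (position) and va1 (colour) for one vertex of the interleaved data.
function readVertex(data: number[], index: number): { va0: Vec4; va1: Vec4 } {
  const base = index * FLOATS_PER_VERTEX;
  return {
    va0: [data[base], data[base + 1], data[base + 2], 1],
    va1: [data[base + 3], data[base + 4], data[base + 5], 1],
  };
}

// AGAL "m44 dst, src, vcN": each output component is the 4-component dot
// product of src with one of four consecutive constant registers.
function m44(src: Vec4, m: Mat4): Vec4 {
  const dp4 = (row: Vec4) =>
    src[0] * row[0] + src[1] * row[1] + src[2] * row[2] + src[3] * row[3];
  return [dp4(m[0]), dp4(m[1]), dp4(m[2]), dp4(m[3])];
}

// m44 vt0, va0, vc0 / m44 op, vt0, vc4 / mov v0, va1
function vertexShader(data: number[], index: number, vc0: Mat4, vc4: Mat4) {
  const { va0, va1 } = readVertex(data, index);
  const vt0 = m44(va0, vc0); // first matrix (e.g. a model transform)
  const op = m44(vt0, vc4);  // second matrix (e.g. a projection) -> clip space
  return { op, v0: va1 };    // colour is passed through unchanged
}

const identity: Mat4 = [
  [1, 0, 0, 0],
  [0, 1, 0, 0],
  [0, 0, 1, 0],
  [0, 0, 0, 1],
];

// The triangle's vertex data from the snippet.
const triangle = [0, 1, 0, 1, 0, 0, -1, -1, 0, 0, 1, 0, 1, -1, 0, 0, 0, 1];
```

For example, vertexShader(triangle, 1, identity, identity) leaves the bottom-left vertex at (-1, -1, 0, 1) and passes its green colour through. On the GPU the same three instructions run for every vertex in parallel, which is exactly the work the frameworks below hide from you.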

But we’re a GIS technology company, so we started asking ourselves how these advances in web-based 3D visualization could help the geospatial community. The possibilities are endless, but here is some of the low-hanging fruit:

- Terrain visualization

- Flight simulation

- 3D-based COP (common operational picture)

- Utility/Pipelines

- Physics simulations

- Development pre-visualization

- Resource geolocation/tracking

- Etc.

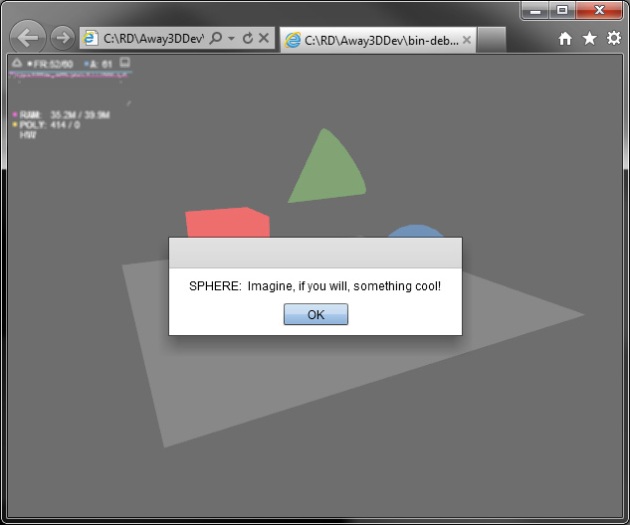

Caption: Who would have thought that simple polygons would get me so excited?

Once the polygons were in place, I thought it would be useful to interact with the 3D objects. A simple property change (mouseEnabled = true) plus an extra event listener, and voilà: we now have interactive 3D objects.
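Setting mouseEnabled and adding a listener is all the framework asks of us; under the hood, 3D picking generally means casting a ray from the camera through the cursor and testing it against the mesh’s triangles. Below is a minimal TypeScript sketch of that per-triangle test using the standard Möller–Trumbore algorithm. This is an assumption about how picking works in general, not a description of Away3D’s internals (which may use bounding volumes first, or GPU-based picking), and the function names are hypothetical.

```typescript
// Ray/triangle intersection (Möller–Trumbore), the core test behind most
// CPU-side 3D mouse picking. Not Away3D's own code.
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];

// Returns the distance t along the ray to the hit point, or null on a miss.
function intersectTriangle(origin: Vec3, dir: Vec3, v0: Vec3, v1: Vec3, v2: Vec3): number | null {
  const EPS = 1e-9;
  const e1 = sub(v1, v0);
  const e2 = sub(v2, v0);
  const p = cross(dir, e2);
  const det = dot(e1, p);
  if (Math.abs(det) < EPS) return null; // ray is parallel to the triangle's plane
  const inv = 1 / det;
  const s = sub(origin, v0);
  const u = dot(s, p) * inv; // first barycentric coordinate
  if (u < 0 || u > 1) return null;
  const q = cross(s, e1);
  const v = dot(dir, q) * inv; // second barycentric coordinate
  if (v < 0 || u + v > 1) return null;
  const t = dot(e2, q) * inv;
  return t > EPS ? t : null; // only count hits in front of the ray origin
}
```

With the camera at (0, 0, -5) looking down +z, intersectTriangle([0, 0, -5], [0, 0, 1], [0, 1, 0], [-1, -1, 0], [1, -1, 0]) hits the Hello Triangle at distance 5. A picker runs this over candidate triangles, keeps the smallest t, and dispatches the mouse event to the object that owns that triangle.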

Caption: Imagine, if you will, something cool!

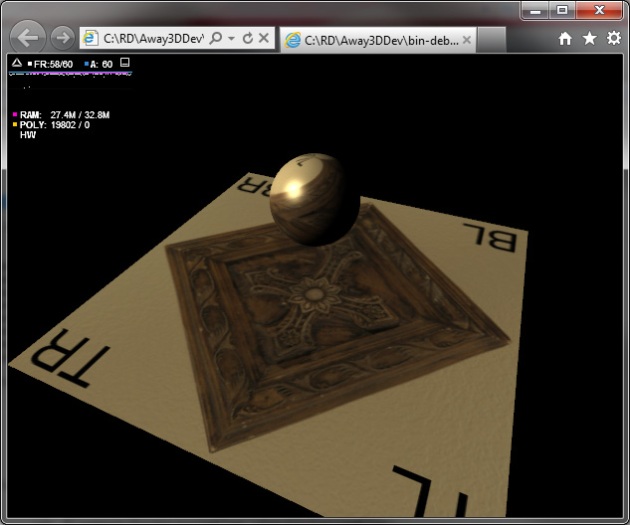

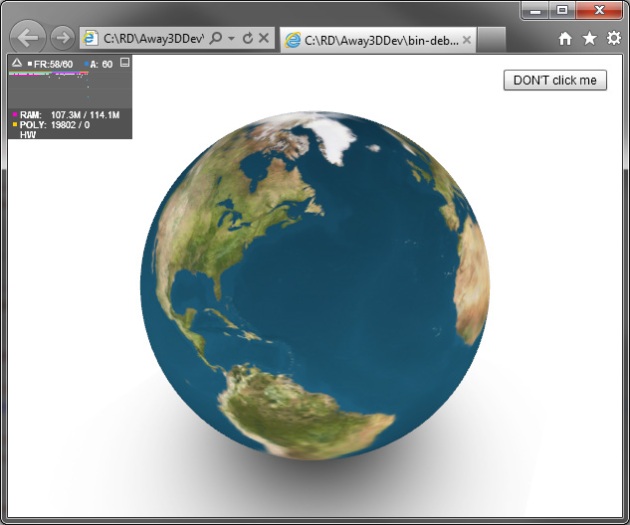

Then I asked myself: what would a 3D scene be without a little mood lighting and some textures? Away3D supports a number of light and material options, including DirectionalLight, PointLight, BitmapMaterial, ColorMaterial, VideoMaterial, etc. Notice the poly count in the statistics in the upper-left corner… 19,802 polygons. That sphere has waaay too many divisions, but take my word for it, manipulating the 3D scene is as smooth as butter.

Caption: Adding a little mood makes everything seem more dramatic.

Next up was manipulating 3D objects with embedded audio assets. We’re all familiar with audio equalizers, but what about representing audio frequencies in real-time 3D? The flash.media.SoundMixer class offers useful utilities for introspecting audio playback (such as SoundMixer.computeSpectrum()), and tying them to 3D objects makes for an interesting demonstration. There’s not much to see in a screenshot, but picture each bar bumping up and down to the rhythm.
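A sketch of the idea in TypeScript: SoundMixer.computeSpectrum(bytes, true) fills a ByteArray with 512 floats (256 for the left channel, then 256 for the right), and averaging groups of frequency bins gives one height per bar. The sketch assumes the floats have already been read out of the ByteArray (in ActionScript, with readFloat()); the band grouping, channel averaging and clamping are illustrative choices rather than part of the Flash API, and spectrumToBarHeights is a hypothetical name.

```typescript
// Turn one computeSpectrum() snapshot (512 floats: 256 left, then 256 right)
// into heights for an N-bar 3D equalizer. Banding and scaling are illustrative.
const BINS_PER_CHANNEL = 256;

function spectrumToBarHeights(spectrum: number[], barCount: number, maxHeight: number): number[] {
  if (spectrum.length !== 2 * BINS_PER_CHANNEL) {
    throw new Error(`expected ${2 * BINS_PER_CHANNEL} values, got ${spectrum.length}`);
  }
  if (barCount < 1 || barCount > BINS_PER_CHANNEL) {
    throw new Error("barCount must be between 1 and 256");
  }
  const binsPerBar = Math.floor(BINS_PER_CHANNEL / barCount);
  const heights: number[] = [];
  for (let bar = 0; bar < barCount; bar++) {
    let sum = 0;
    for (let i = bar * binsPerBar; i < (bar + 1) * binsPerBar; i++) {
      // Average the left- and right-channel magnitudes of this frequency bin.
      sum += (Math.abs(spectrum[i]) + Math.abs(spectrum[i + BINS_PER_CHANNEL])) / 2;
    }
    const level = Math.min(1, sum / binsPerBar); // clamp the band average to [0, 1]
    heights.push(level * maxHeight);
  }
  return heights;
}
```

Calling this once per ENTER_FRAME and applying each height to a bar’s vertical scale is enough to make the bars bounce; bar counts that divide 256 evenly (8, 16, 32…) use every bin.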

Caption: 3D equalizer gives a new meaning to audio visualizations

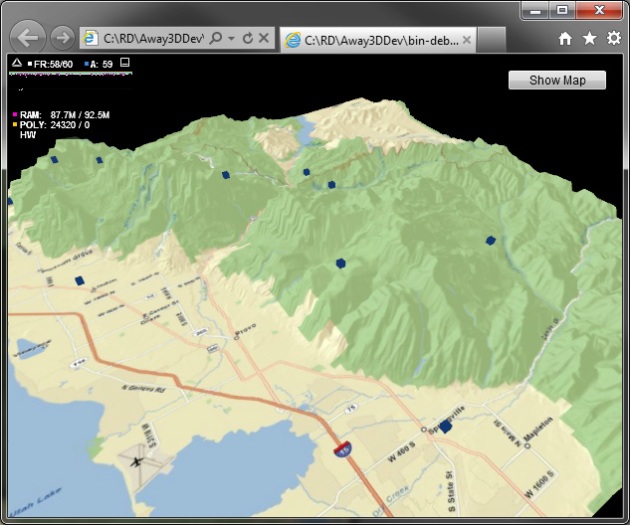

At this point I was ready to begin delving into more geospatial experimentation. What’s the first thing many of us GIS professionals think of when we think of 3D… TINs, DEMs, elevation, right? Unfortunately, the following demonstrations don’t represent true elevations (rather, pixel values are converted into relative extrusion heights), but from a visualization perspective the results are impressive, if not accurate. Notice the poly count on this screenshot… 80,000 polygons without a single hiccup in frame rate as I spin the map.
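The pixel-to-height conversion can be sketched as a grid mesh with one vertex per pixel. This TypeScript version assumes pixels arrive as packed 0xRRGGBB integers in row-major order and measures brightness with the Rec. 601 luma weights; both are assumptions, since the post doesn’t say which channel or formula the demo used, and the function names are hypothetical. As in the demo, the heights are relative, not real elevations.

```typescript
// Extrude a grid mesh from pixel colours (relative heights, not elevations).
// Assumptions: packed 0xRRGGBB pixels, row-major, Rec. 601 luma as brightness.
function pixelToHeight(rgb: number, maxHeight: number): number {
  const r = (rgb >> 16) & 0xff;
  const g = (rgb >> 8) & 0xff;
  const b = rgb & 0xff;
  const luma = 0.299 * r + 0.587 * g + 0.114 * b; // 0..255
  return (luma / 255) * maxHeight;
}

// One vertex (x, height, z) per pixel, with y as the up axis.
function buildHeightGrid(pixels: number[], width: number, depth: number, maxHeight: number): number[] {
  const vertices: number[] = [];
  for (let row = 0; row < depth; row++) {
    for (let col = 0; col < width; col++) {
      vertices.push(col, pixelToHeight(pixels[row * width + col], maxHeight), row);
    }
  }
  return vertices;
}

// Each cell between four neighbouring vertices is split into two triangles.
function gridIndices(width: number, depth: number): number[] {
  const indices: number[] = [];
  for (let row = 0; row < depth - 1; row++) {
    for (let col = 0; col < width - 1; col++) {
      const i = row * width + col;
      indices.push(i, i + 1, i + width, i + 1, i + width + 1, i + width);
    }
  }
  return indices;
}

const triangleCount = (width: number, depth: number): number => 2 * (width - 1) * (depth - 1);
```

The triangle count grows with the square of the sampling resolution: a 201 × 201 grid gives 2 × 200 × 200 = 80,000 triangles, the figure in the screenshot, although the post doesn’t say what grid size the demo actually used.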

Caption: Pixel colors are converted into relative heights to create the 3D extrusion.

Caption: A closer look

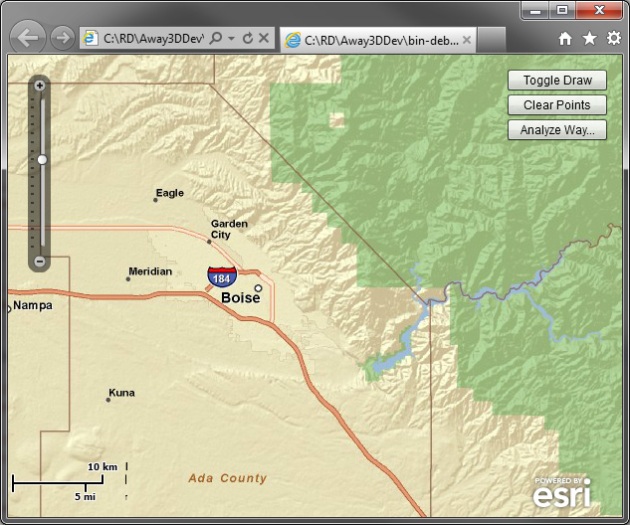

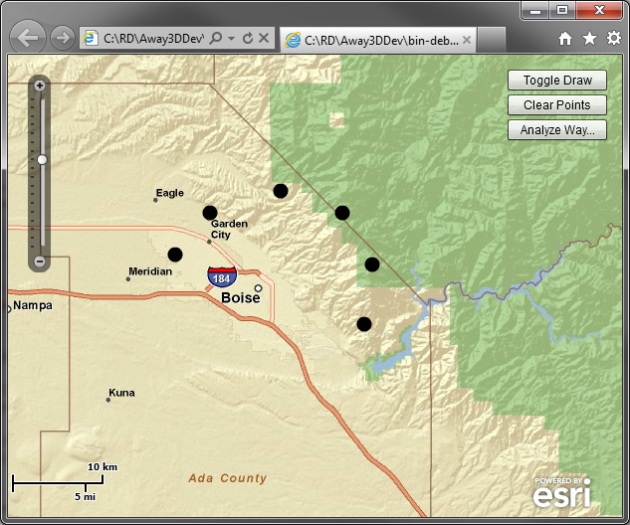

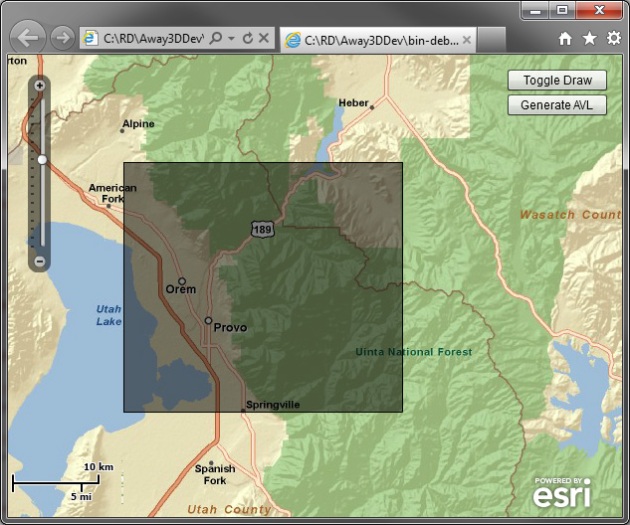

As developers, we’re always looking for ways to create value for our stakeholders. Those who work with the ESRI development APIs are probably familiar with the ESRI basemaps and with manipulating graphics via GraphicsLayers… now if only these could be extruded into 3D…

Caption: Starting sample application. User may begin sketching their waypoints.

Caption: Ready for 3D visualization

Caption: Elevation and basemap data mashed together to create a 3D flight simulation. The red box could easily be swapped out for a camera.

In the concepts above, I use three different map services published with ESRI’s ArcGIS Server. The first uses the ESRI streets basemap as a simple reference while sketching the waypoints. Once the waypoints are drawn, the application analyzes the extent of the points and exports images of that extent from a map service rendering DEM data, as well as from ESRI’s topographic basemap. These are mashed together to create the final scene. The red box represents an object that could just as well have been a camera, creating a “pilot’s perspective” flight simulation. Again, the terrain is only a simulation/visualization and should not be considered representative of true elevation.
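The “analyze the extent, then export images” step might look like the TypeScript sketch below. bbox, bboxSR, size, format and f are parameters of the ArcGIS Server REST API’s MapServer export operation; everything else is an assumption: the waypoints are taken to be in the service’s spatial reference already, the padding ratio is arbitrary, and the service URL in the example is a hypothetical placeholder.

```typescript
// Compute a padded extent around the sketched waypoints and build a
// MapServer "export" request for it. Service URLs are hypothetical.
interface MapPoint { x: number; y: number; }
interface Extent { xmin: number; ymin: number; xmax: number; ymax: number; }

function extentOf(points: MapPoint[], padRatio = 0.1): Extent {
  if (points.length === 0) throw new Error("no waypoints");
  const xs = points.map(p => p.x);
  const ys = points.map(p => p.y);
  const xmin = Math.min(...xs), xmax = Math.max(...xs);
  const ymin = Math.min(...ys), ymax = Math.max(...ys);
  const padX = (xmax - xmin) * padRatio; // leave a margin around the route
  const padY = (ymax - ymin) * padRatio;
  return { xmin: xmin - padX, ymin: ymin - padY, xmax: xmax + padX, ymax: ymax + padY };
}

function exportUrl(mapServerUrl: string, e: Extent, width: number, height: number, wkid: number): string {
  const params = [
    `bbox=${[e.xmin, e.ymin, e.xmax, e.ymax].join(",")}`,
    `bboxSR=${wkid}`,
    `size=${width},${height}`,
    "format=png",
    "f=image", // return the image itself rather than JSON
  ];
  return `${mapServerUrl}/export?${params.join("&")}`;
}
```

Requesting the DEM service and the topographic basemap with the same extent and size keeps their pixels aligned, so the DEM image can drive the extrusion heights while the topographic image is draped over the result as a texture.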

Now that I had location working at a local level, I started thinking international/global. Could I apply the concepts learned while generating local 3D scenes at a much larger scope? You bet! These examples demonstrate not only loading data dynamically from external web services into a 3D scene, but also mapping geographic coordinates to pixel coordinates. We can also attach event listeners to the markers to provide click or tooltip information.
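Mapping geographic coordinates to pixel coordinates is simple when the world image uses an equirectangular (plate carrée) layout, where longitude and latitude map linearly to x and y; that layout is an assumption here, and a Web Mercator basemap would need the Mercator formula for y instead. The TypeScript sketch also places the same coordinate on a sphere for a globe-style scene; the axis convention (y through the poles) is likewise an assumption, and the function names are hypothetical.

```typescript
// Geographic coordinates to pixel space, assuming an equirectangular image.
function lonLatToPixel(lon: number, lat: number, width: number, height: number): { x: number; y: number } {
  const x = ((lon + 180) / 360) * width;
  const y = ((90 - lat) / 180) * height; // pixel y grows downward, latitude upward
  return { x, y };
}

// The same coordinate on a sphere of the given radius, with the y axis
// through the poles (an assumed convention; engines differ).
function lonLatToSphere(lon: number, lat: number, radius: number): [number, number, number] {
  const phi = (lat * Math.PI) / 180;
  const lambda = (lon * Math.PI) / 180;
  return [
    radius * Math.cos(phi) * Math.cos(lambda),
    radius * Math.sin(phi),
    radius * Math.cos(phi) * Math.sin(lambda),
  ];
}
```

On a 2048 × 1024 image, lonLatToPixel(0, 0, 2048, 1024) lands at the centre (1024, 512); each marker is then positioned at its computed point and given its own click or tooltip listener.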

Caption: There’s something about “don’t click me” buttons that drives people crazy.

Caption: Data loaded from an external web service and converted to pixel space, with tooltip interactivity

The mapping of lat/lon data in real-time 3D reminded me of AVL (automatic vehicle location) in COP applications. What if you were managing a wildfire in rough terrain and wanted to visualize on-the-ground resources in the context of that terrain? In the next experiment, I created a simulated web service that the 3D scene polls every XX seconds to update the locations of resources on the ground. The blue resources move over time as their coordinates are updated via web-service calls. All the while, I’m zooming in and out of canyons, panning around, etc., within the 3D scene. How about real-time FAA flight visualizations?
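One way to apply each poll result is sketched below in TypeScript. It assumes the (simulated) service returns a list of { id, lon, lat } records, and it glides each marker from its previous fix to the new one instead of jumping; both the record shape and the interpolation are illustrative choices rather than details from the post, the polling interval is left to the application, and all names are hypothetical.

```typescript
// Merge poll results into a marker table and interpolate between fixes.
// Record shape and smoothing are assumptions, not the demo's actual code.
interface ResourceFix { id: string; lon: number; lat: number; }
interface Marker { id: string; from: ResourceFix; to: ResourceFix; }

// Existing markers start a new leg from their last target; new resources
// appear in place.
function applyPoll(markers: Record<string, Marker>, fixes: ResourceFix[]): void {
  for (const fix of fixes) {
    const existing = markers[fix.id];
    markers[fix.id] = existing
      ? { id: fix.id, from: existing.to, to: fix }
      : { id: fix.id, from: fix, to: fix };
  }
}

// Position a fraction t (clamped to 0..1) of the way through the current
// poll interval, by linear interpolation between the two fixes.
function positionAt(marker: Marker, t: number): { lon: number; lat: number } {
  const k = Math.max(0, Math.min(1, t));
  return {
    lon: marker.from.lon + (marker.to.lon - marker.from.lon) * k,
    lat: marker.from.lat + (marker.to.lat - marker.from.lat) * k,
  };
}
```

Each frame, t would be the time since the last poll divided by the poll interval; the interpolated lon/lat is then converted to scene coordinates with the same geographic-to-pixel mapping used for the static markers.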

Caption: Select area to analyze

Caption: See on-the-ground resource locations in near real time (simulated from a web service).

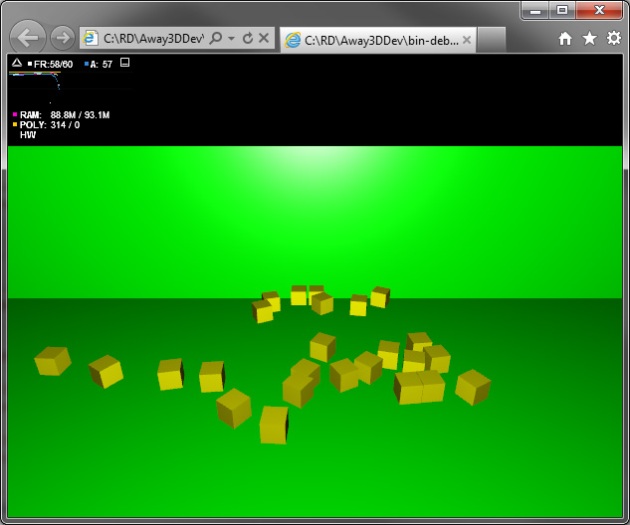

Finally (and just for the fun of it), I wanted to start testing 3D physics. Adobe has another great project underway, codenamed “Alchemy”, that compiles raw C and C++ code into SWF or SWC files that can be embedded in your applications. Someone in the community was kind enough to compile the Bullet physics engine (http://bulletphysics.org) into a consumable SWC file, which is used in the following demonstration. There’s not much practical use for it yet, but it certainly opens the door to new possibilities.

Caption: Shooting balls at a wall of cubes could be packaged into a game

Caption: Resulting chaos

Hopefully we can post a demo video soon; in the meantime, enjoy the screenshots and discussion, and start thinking about adding 50% more D to your world.
